Tencent's Hunyuan AI Video is an open-source 13B-parameter model that generates high-quality videos from text with advanced motion and visual fidelity.

Hunyuan Video

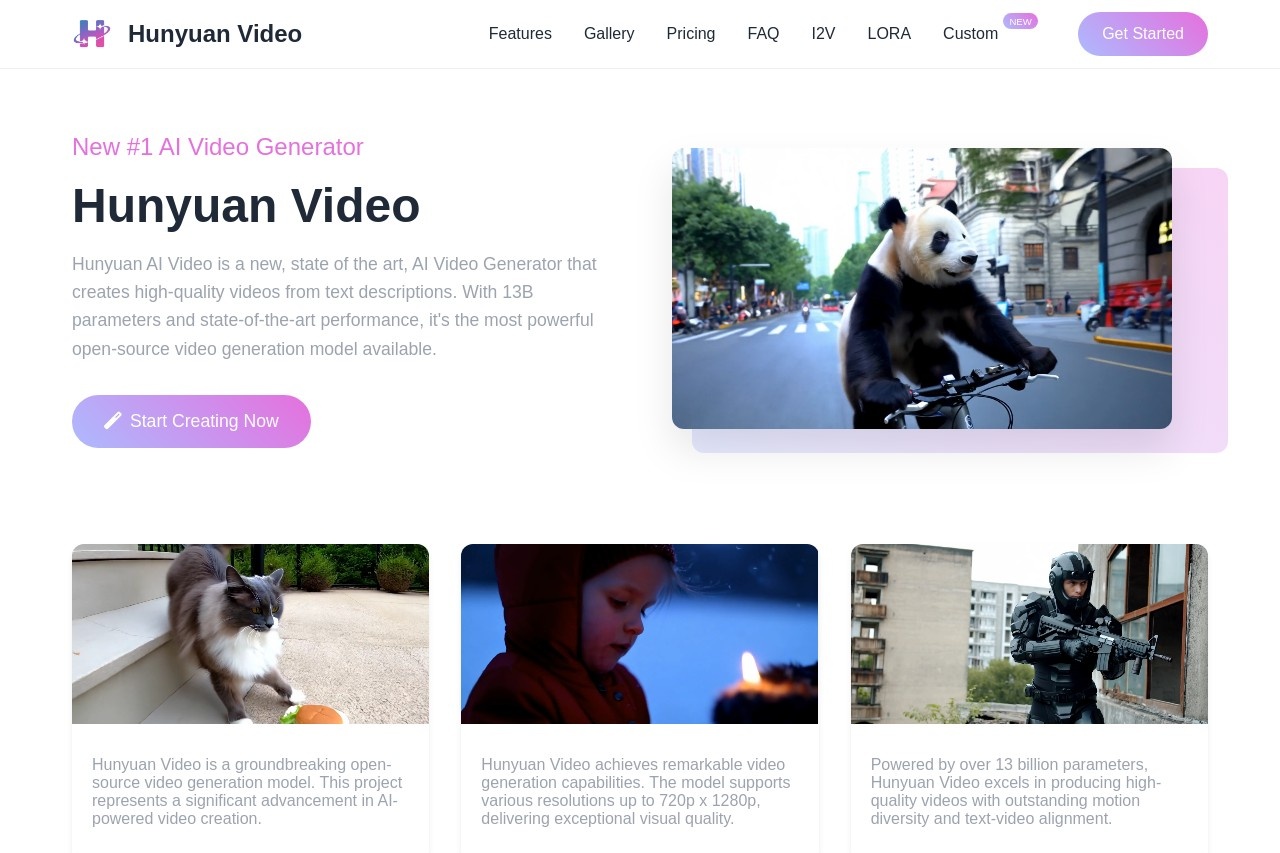

Artificial intelligence continues to push the limits of content generation, and Tencent's Hunyuan Video stands out as one of the most innovative tools in this area. With 13 billion parameters, this open-source model turns plain text prompts into high-quality videos with rich motion dynamics and strong picture quality. This article takes a closer look at the tool's functionality, walks through some test cases, and outlines how to get started.

Introduction to Hunyuan Video

The Hunyuan Video model is one part of Tencent's broader Hunyuan AI family and was built to compete head to head with existing text-to-video models. Its key distinguishing feature is that it is open source: developers and researchers can freely modify the core for their own purposes. The 13B-parameter architecture lets it depict complex scenes while keeping the generated output consistent.

The model is efficient at generating videos with fluid transitions, naturally moving objects, and continuous visual patterns. Where many competing models struggle with long sequences, Hunyuan Video is particularly good at preserving the temporal flow between frames, which makes it well suited to producing video content for advertising and education.

Key Features and Capabilities

Hunyuan Video offers a number of standout features that place it among the leading video generation tools available:

High-resolution output (the original open-source release generates video at up to 720p)

Deep-learning-based modeling of complex movements and interactions



Customizable style parameters for artistic control

Open-source model weights that encourage community development
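Hunyuan Video is steered mainly through its text prompt, so one plausible way to think about style parameters is as descriptors appended to a scene description. The sketch below assumes exactly that; the `StyledPrompt` class and the preset names are hypothetical and not part of the Hunyuan Video codebase.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical presets: names and descriptors are illustrative only,
# not options shipped with Hunyuan Video.
STYLE_PRESETS = {
    "cinematic": ["cinematic lighting", "shallow depth of field", "35mm film"],
    "anime": ["anime style", "cel shading", "vibrant colors"],
    "documentary": ["handheld camera", "natural light", "realistic"],
}


@dataclass
class StyledPrompt:
    """A base scene description plus optional style controls (hypothetical)."""

    scene: str
    style: Optional[str] = None
    extra: List[str] = field(default_factory=list)

    def render(self) -> str:
        """Join the scene, the preset's descriptors, and any extra terms."""
        parts = [self.scene.strip().rstrip(".")]
        if self.style is not None:
            if self.style not in STYLE_PRESETS:
                raise ValueError(f"unknown style preset: {self.style!r}")
            parts.extend(STYLE_PRESETS[self.style])
        parts.extend(self.extra)
        return ", ".join(parts) + "."
```

For example, `StyledPrompt("A sunset over mountains.", style="cinematic").render()` yields `"A sunset over mountains, cinematic lighting, shallow depth of field, 35mm film."`. Keeping presets as plain data makes it easy to compare styles on the same scene.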

The model's architecture applies attention across both spatial and temporal dimensions, which keeps generated objects consistent and their motion smooth. Together, these design choices greatly reduce the visual artifacts that affected earlier generations of video models.
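To make the idea of attention over space and time concrete, here is a minimal pure-Python sketch of joint spatio-temporal self-attention. It assumes the video has already been encoded into a (time, height, width) grid of token vectors and omits learned projections, multiple heads, and positional encodings, so it illustrates the general technique rather than Hunyuan Video's actual implementation.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def flatten_video_tokens(video: List[List[List[Vector]]]) -> List[Vector]:
    """Flatten a (time, height, width) grid of token vectors into one sequence."""
    return [tok for frame in video for row in frame for tok in row]


def spatiotemporal_attention(tokens: List[Vector]) -> List[Vector]:
    """Single-head scaled dot-product self-attention over all tokens.

    Simplifying assumption: queries, keys, and values are the tokens
    themselves (no learned projections). Because the sequence contains every
    frame, each token can attend to any position in space and in time.
    """
    d = len(tokens[0])
    scale = 1.0 / math.sqrt(d)
    outputs = []
    for q in tokens:
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in tokens]
        weights = softmax(scores)
        # Each output is a weighted average of all token vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, tokens)) for j in range(d)])
    return outputs
```

A 2-frame, 2x2 grid flattens to 8 tokens, and each output mixes information from both frames. The cost grows quadratically with the number of tokens, which is one reason long, high-resolution clips need so much compute.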

Practical Test Outcomes

Testing Hunyuan Video under a range of conditions shows both its strengths and its current bottlenecks. In simple scenarios, such as a sunset over mountains or a busy city street, the output is lifelike, with perceptible motion and changes in lighting. The model does have trouble with backgrounds, for example when objects are occluded, but the resulting inconsistencies are fewer than with other models.
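One simple way to quantify frame-to-frame inconsistencies like these is to measure how much consecutive frames differ. The sketch below is a generic heuristic, not the method used in these tests: it assumes decoded grayscale frames as nested lists and a hand-picked threshold, and the function names are hypothetical.

```python
from typing import List

Frame = List[List[float]]  # grayscale intensities, e.g. in [0, 1]


def frame_difference(a: Frame, b: Frame) -> float:
    """Mean absolute per-pixel difference between two same-sized, non-empty frames."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("frames must have the same dimensions")
    total = 0.0
    count = 0
    for row_a, row_b in zip(a, b):
        for pa, pb in zip(row_a, row_b):
            total += abs(pa - pb)
            count += 1
    if count == 0:
        raise ValueError("frames must not be empty")
    return total / count


def flag_inconsistent_transitions(frames: List[Frame], threshold: float) -> List[int]:
    """Return indices i where the jump from frame i to frame i+1 exceeds threshold.

    Large jumps can indicate flicker or objects popping in and out, for
    example after an occlusion. The threshold is a tuning knob, not a
    standard value.
    """
    return [
        i
        for i in range(len(frames) - 1)
        if frame_difference(frames[i], frames[i + 1]) > threshold
    ]
```

With three uniform 2x2 frames at intensities 0.0, 0.1, and 0.9 and a threshold of 0.3, only the transition at index 1 is flagged. Real footage has legitimate motion, so a threshold like this only highlights candidates for manual review.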

One notable strength is how the model renders human-like figures. Although not photorealistic, they look more lifelike than those from comparable models, which makes them acceptable for explainer videos. They also suit conceptual demos, where strict accuracy is not yet required.

Generation speed is reasonable for local deployment: a 5-second clip takes about 90 seconds on a high-end consumer machine. Cloud deployment appears to be more efficient, so hosting infrastructure remains a key consideration.
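The 90-seconds-per-clip figure makes it easy to estimate the cost of longer videos. This sketch assumes longer footage is assembled from independently generated 5-second clips and that render time per clip stays constant; real timings will vary with resolution, inference steps, and hardware.

```python
import math


def estimate_generation_seconds(
    target_video_seconds: float,
    clip_seconds: float = 5.0,
    seconds_per_clip: float = 90.0,
) -> float:
    """Estimate wall-clock render time for target_video_seconds of footage.

    Assumes the footage is built from separately generated clips of
    clip_seconds each, and that every clip takes seconds_per_clip to render
    (defaults taken from the local test described above).
    """
    clips = math.ceil(target_video_seconds / clip_seconds)
    return clips * seconds_per_clip
```

Under these assumptions, each second of output costs about 18 seconds of compute, so a 60-second video needs 12 clips, or roughly 1,080 seconds (18 minutes). Rounding up to whole clips reflects that a partial clip still has to be generated in full.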

Step-by-Step Usage Tutorial

At first glance, using Hunyuan Video seems to demand some code-related troubleshooting, but for users with a technical background the process is fairly easy.

Clone the repository from the official Hunyuan GitHub page

Install the dependencies, e.g., PyTorch and CUDA for GPU acceleration

Download the pre-trained model weights

Enter your text prompt in the provided script

Run the provided command with your prompt

Review the generated video and post-process it as needed
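The last three steps can be scripted. This sketch assumes the repository's `sample_video.py` entry point with `--prompt` and `--save-path` options, as described in its README at the time of writing (verify against the current version), and that results are saved as `.mp4` files. `build_generate_command`, `newest_video`, and `run_generation` are hypothetical helper names.

```python
import subprocess
from pathlib import Path
from typing import List


def build_generate_command(prompt: str, save_path: str = "./results") -> List[str]:
    """Build the argument list for the sampling script (flags are assumptions; check the README)."""
    return [
        "python3", "sample_video.py",
        "--prompt", prompt,
        "--save-path", save_path,
    ]


def newest_video(save_path: str) -> Path:
    """Return the most recently written, non-empty .mp4 in save_path."""
    videos = sorted(Path(save_path).glob("*.mp4"), key=lambda p: p.stat().st_mtime)
    if not videos:
        raise FileNotFoundError(f"no .mp4 files in {save_path}")
    if videos[-1].stat().st_size == 0:
        raise ValueError(f"{videos[-1]} is empty")
    return videos[-1]


def run_generation(prompt: str, save_path: str = "./results") -> Path:
    """Run the script from inside the cloned repository, then locate the output."""
    subprocess.run(build_generate_command(prompt, save_path), check=True)
    return newest_video(save_path)
```

Passing the arguments as a list rather than a shell string avoids quoting problems when the prompt contains spaces or punctuation. Resolution, clip length, and other options can be appended to the list once confirmed against the repository.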

Users who find command-line tools intimidating may prefer community-built web interfaces, which offer a more visual and straightforward workflow than the command line.

Pros and Cons

Pros: Hunyuan Video outperforms most open-source tools at generating visually appealing videos with coherent motion, without resorting to visually extravagant effects.

Cons: The model demands significant computational resources to perform at its best, and many users may not have access to that hardware, which limits its accessibility.

Hunyuan Video is a valuable addition to the open-source AI video generation landscape: it advances the research field while also serving as a practical tool for everyday applications. Because the model is open, developers can build custom video pipelines of their own. For video content creators, that flexibility can be worth more than output quality alone.